What Can the CyberDesserts AI Learning Assistant Do?

I write about prompt injection attacks on this blog. It felt hypocritical to explain threats I had never actually defended against.

So I built something that has to defend against them. The CyberDesserts Learning Assistant captures everything I wanted to learn about. You can ask it about threats, defences, career paths, tools, or incident response. But this post is not just about the tool. It is about what building it taught me, all while I was in my happy place.

It Started Simple

The original idea was modest: "What if I could ask questions about my blog?" A searchable knowledge base powered by AI. Wouldn't that be cool?

That simple question turned into months of weekend research, experimentation, and iteration, and I had a ball. Building a RAG pipeline from scratch against an LLM forced me to confront every AI security challenge I had only understood theoretically. Prompt injection, data poisoning, hallucination mitigation: all of it became real.

Go straight to the AI-powered Learning Assistant

Data Quality Is Everything

This was the bit I hated: when it went wrong, I had to start again. I spent more time curating and parsing knowledge sources than I did on any visible feature. Figuring out and testing chunking methodologies for my blog articles was a learning curve.
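As a rough illustration of what a chunking method can look like, here is a minimal sketch of paragraph-based chunking with overlap. The post does not say which method the assistant actually uses, so the function name `chunk_paragraphs`, the `target_chars` size, and the one-paragraph overlap are all assumptions made for the example.

```python
def chunk_paragraphs(text: str, target_chars: int = 500, overlap: int = 1) -> list[str]:
    """Pack paragraphs into chunks of roughly target_chars characters.

    Hypothetical helper for illustration only. The last `overlap` paragraphs
    of each chunk are repeated at the start of the next one so that context
    is not lost at chunk boundaries. The size is a soft limit: a single long
    paragraph is kept whole rather than split mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    for para in paragraphs:
        candidate = "\n\n".join(current + [para])
        if current and len(candidate) > target_chars:
            # Close the current chunk and carry the overlap forward.
            chunks.append("\n\n".join(current))
            current = current[-overlap:] if overlap > 0 else []
        current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    article = "First paragraph.\n\nSecond paragraph.\n\nThird paragraph."
    for i, chunk in enumerate(chunk_paragraphs(article, target_chars=40), start=1):
        print(f"--- chunk {i} ---\n{chunk}")
```

Splitting on paragraph boundaries keeps each chunk readable on its own, and the overlap trades some duplication in the index for fewer answers that miss context sitting just across a boundary.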

The assistant draws from this blog, MITRE ATT&CK mappings, ransomware group intelligence, industry advisories, and security reports. The real work was structuring that data correctly, cleaning inputs, tagging metadata, and preventing the model from confidently making things up. Every time I thought I had cracked it, I would ingest new data and break the vector database, or watch the same hallucinations resurface.

The flashy parts of AI get all the attention. The boring data work determines whether the system is actually useful.

"I Don't Know"

Getting the assistant to admit uncertainty was harder than getting it to sound authoritative.

LLMs are trained to be helpful. They want to give you an answer. Teaching a model to say "I don't have information on that" instead of fabricating something plausible took more prompt engineering than I expected.

This matters for security tooling. A learning assistant that hallucinates is worse than no assistant at all. If it confidently tells you a CVE is patched when it is not, or misattributes a threat actor, you are worse off than before you asked.

Grounding responses in real sources is everything. That is why every response cites its sources, so you can verify them yourself.
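To make the idea of grounding concrete, here is a minimal sketch of one way to combine an explicit "I don't know" instruction with numbered source citations. It is not the assistant's actual prompt or code: the `Passage` type, the `build_grounded_prompt` function, the `min_score` threshold, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical refusal text; the post quotes a similar phrase.
FALLBACK = "I don't have information on that."


@dataclass
class Passage:
    source: str   # e.g. an article title or URL
    text: str     # the retrieved chunk
    score: float  # retrieval similarity, higher is better (assumed 0..1)


def build_grounded_prompt(
    question: str, passages: list[Passage], min_score: float = 0.5
) -> Optional[str]:
    """Build a prompt restricted to retrieved passages.

    Returns None when nothing relevant was retrieved, so the caller can
    answer with FALLBACK without ever calling the model.
    """
    relevant = [p for p in passages if p.score >= min_score]
    if not relevant:
        return None
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(relevant, start=1)
    )
    return (
        "Answer using ONLY the numbered sources below and cite them like [1].\n"
        f"If the sources do not contain the answer, reply exactly: {FALLBACK}\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )
```

The sketch puts the constraint in two places: an explicit instruction inside the prompt, and a check before the model is called at all. The second is cheaper and cannot be talked out of its answer, which is why it is worth having even when the prompt instruction works well.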

Prompt Engineering Is Part Science, Part Art

Small changes in how you instruct the model can completely change response quality.

I have rewritten the core prompts dozens of times. Each iteration taught me something new about how these models process instructions. Word choice matters. Instruction order matters. Explicit constraints matter more than implicit expectations.

It is endlessly fascinating and occasionally maddening.

What the Assistant Does

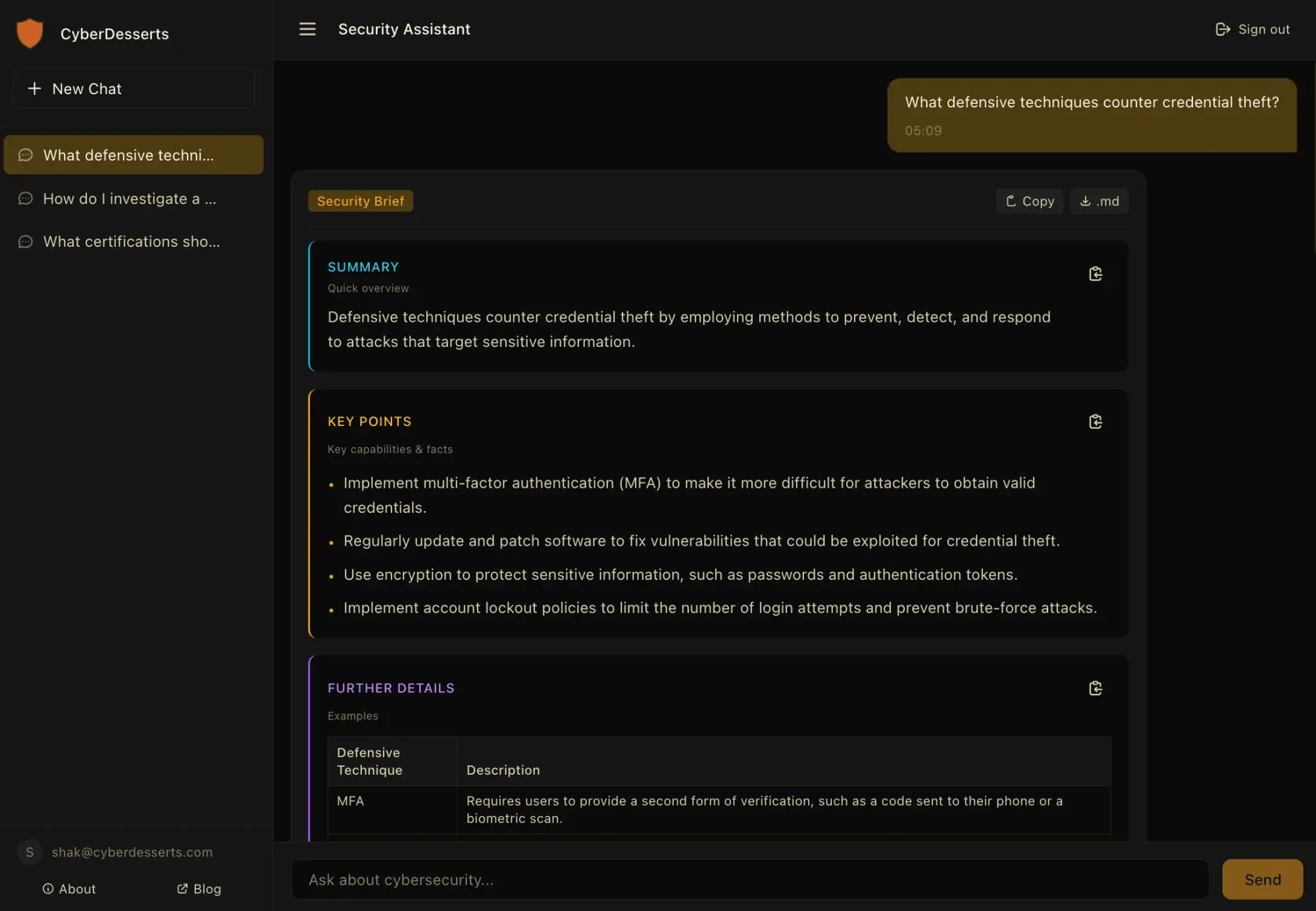

The Learning Assistant generates structured Security Briefs in response to your questions. Each brief includes:

- Summary for quick context

- Key points with actionable information

- Tables mapping techniques, phases, or comparisons

- Source citations linking back to the underlying knowledge base

- Related topics for exploring connected concepts

- Follow-up questions to guide deeper research

Ask about LockBit's ransomware-as-a-service model and you get a breakdown of the affiliate structure, initial access techniques, and persistence methods. Ask about SOC analyst certifications and you get a comparison of relevant credentials with links to career guidance.

You can export any response as markdown if you want to save it or share it with your team.
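For readers curious how a structured brief and its markdown export might fit together, here is a minimal sketch. It is an assumption about the shape of the data, not the assistant's real schema: the `SecurityBrief` class, its field names, and `to_markdown` are hypothetical, and tables are left out to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityBrief:
    # Hypothetical fields mirroring the sections listed above.
    title: str
    summary: str
    key_points: list[str] = field(default_factory=list)
    sources: list[str] = field(default_factory=list)
    related_topics: list[str] = field(default_factory=list)
    follow_ups: list[str] = field(default_factory=list)


def _bullets(heading: str, items: list[str]) -> list[str]:
    """Render one bulleted section, or nothing if the section is empty."""
    if not items:
        return []
    return [f"## {heading}", ""] + [f"- {item}" for item in items] + [""]


def to_markdown(brief: SecurityBrief) -> str:
    """Render a brief as a markdown document, skipping empty sections."""
    lines = [f"# {brief.title}", "", "## Summary", "", brief.summary, ""]
    lines += _bullets("Key points", brief.key_points)
    lines += _bullets("Sources", brief.sources)
    lines += _bullets("Related topics", brief.related_topics)
    lines += _bullets("Follow-up questions", brief.follow_ups)
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    brief = SecurityBrief(
        title="Example brief",
        summary="A one-paragraph summary goes here.",
        key_points=["First key point"],
        sources=["Name of the cited source"],
    )
    print(to_markdown(brief))
```

Keeping the brief as structured data and rendering it only at export time means the same response could be shown in the chat interface and saved to a file without asking the model to format it twice.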

Honest Limitations

This is a first iteration. Like all AI systems, responses may occasionally contain inaccuracies. The model runs on limited resources, which limits its reasoning depth compared with larger models.

Do not rely on this for production security decisions without validation. Verify critical information from authoritative sources. I have built in safeguards, but no AI system is perfect and I am continuing to learn as I go.

Some topics have deeper coverage than others. The knowledge base is continuously expanding, but gaps exist. If something seems off, it probably is. Trust your instincts and check the source. For me, it is great that I can use it to interact with my blog articles in new ways.

Why This Matters Beyond the Tool

Building this reinforced something I already believed but now understand viscerally: you cannot secure what you do not understand.

Writing about AI threats is useful. Building systems that face those threats is better. The prompt injection defences I implemented are informed by attacks I actually tried. The hallucination mitigations came from watching the model fail in specific, repeatable ways.

If you are advising organisations on AI security, I would encourage you to build something. Even a small project will teach you more than reading a dozen whitepapers.

Try It Out

The Learning Assistant is free. Try it out at chat.cyberdesserts.com.

Ask it something. See what works and what does not. I would love to hear your feedback; we have only just got started.

Report issues, suggest improvements, or request topics at [email protected].

Published January 2026
