When AI Writes the Code, Who Catches the Bugs?

In December 2025, Boris Cherny, creator of Anthropic's Claude Code, revealed he hadn't opened an IDE in a month. Every line of code he shipped, 259 pull requests totalling 497 commits, was written entirely by AI (Cherny, December 2025). He's not alone. Google reports AI generates over 30% of new code internally. And at Anthropic, 90% of Claude Code is now written by Claude Code itself (Pragmatic Engineer, September 2025).

That last point bears repeating. The AI coding tool is building itself.

This isn't assisted coding. This is AI writing software while humans review the output. Two or three years ago, that would have been hard to imagine.

For security teams, that shift changes everything. If AI-written code contains vulnerabilities, will your current security practices catch them?


The Capability Explosion

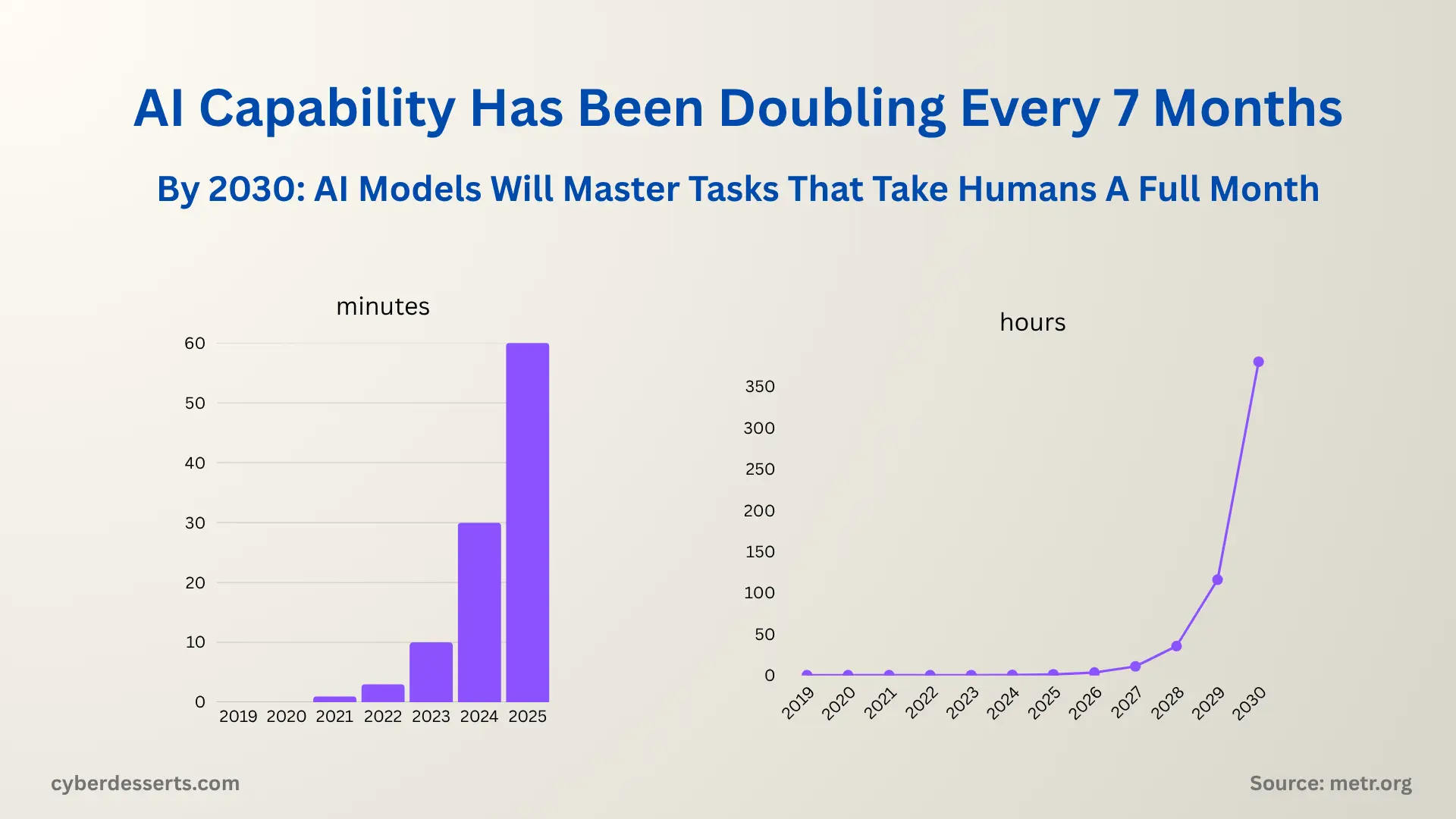

Research from METR (Model Evaluation and Threat Research) reveals a striking trend: AI coding capability doubles approximately every 7 months. In 2019, AI could handle tasks that took humans seconds. By late 2022, that extended to minutes. Today, frontier models reliably complete tasks that would take a human expert around two hours (METR, March 2025).

Extrapolate that curve and the implications become clear. If the trend holds, within 2-4 years AI agents could autonomously complete week-long coding projects. By the end of the decade, month-long development tasks become feasible.
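
The arithmetic behind that extrapolation is easy to check. Here is a minimal sketch, assuming the 7-month doubling time and two-hour starting point quoted above hold steady, and assuming a 40-hour working week and a 160-hour working month (those conversions are illustrative choices, not METR's):

```python
import math

DOUBLING_MONTHS = 7.0        # approximate doubling time quoted from METR
CURRENT_HORIZON_HOURS = 2.0  # "around two hours" today, per the article

# Assumed conversions (not from METR): working hours, not calendar hours
WORK_WEEK_HOURS = 40
WORK_MONTH_HOURS = 160


def months_until(target_hours,
                 current_hours=CURRENT_HORIZON_HOURS,
                 doubling_months=DOUBLING_MONTHS):
    """Months until the task horizon reaches target_hours under steady doubling."""
    doublings = math.log2(target_hours / current_hours)
    return doublings * doubling_months


if __name__ == "__main__":
    for label, hours in [("week-long", WORK_WEEK_HOURS),
                         ("month-long", WORK_MONTH_HOURS)]:
        months = months_until(hours)
        print(f"{label} task ({hours} h): ~{months:.0f} months "
              f"(~{months / 12:.1f} years)")
```

Under those assumptions a week-long task is roughly 2.5 years out and a month-long task roughly 3.7 years out. Change the doubling time or the starting point and the dates move quickly, which is the real caveat with any extrapolation like this.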

Andrej Karpathy, former Tesla AI director and OpenAI co-founder, captured this shift in his 2025 year-in-review: "2025 is the year that AI crossed a capability threshold necessary to build all kinds of impressive programs simply via English, forgetting that the code even exists."

Karpathy also coined the term "vibe coding". Collins Dictionary named it their word of the year.

From Copilot to Colleague

The progression happened faster than most predicted.

In 2021, GitHub Copilot launched as autocomplete for programmers. Suggest the next line. Accept or reject. The human remained firmly in control.

By 2025, the dynamic inverted. Claude Code, Cursor, and similar tools don't just suggest code. They analyse entire codebases, plan implementations, execute changes across multiple files, and iterate on their own output. Cherny described Claude Code running autonomously for over 30 hours on complex tasks without significant performance degradation.

Karpathy frames this as a fundamental shift in how we should think about AI intelligence. "We're not evolving animals," he wrote. "We are summoning ghosts." The training data, architecture, and optimisation pressures that shape LLMs produce something genuinely different from human cognition: brilliant and bizarrely flawed at the same time. Capable of sophisticated reasoning one moment, fooled by simple tricks the next.

He calls this "jagged intelligence." Security teams should pay attention to that phrase.

The Security Equation

Here's where the conversation gets nuanced. The obvious concern is that AI-generated code introduces vulnerabilities at scale. That's partially true. One study found AI models introduce known security flaws approximately 45% of the time (Globe and Mail, December 2025).

But the full picture is more complicated.

What AI Gets Right

LLMs are trained on secure coding patterns. Claude knows the OWASP Top 10. It consistently applies input validation, parameterised queries, and proper authentication checks. It doesn't get tired at 2am and skip the error handling. It doesn't copy-paste from Stack Overflow without checking.
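
For readers who haven't seen the pattern spelled out, here is a minimal sketch of what a parameterised query buys you, using Python's built-in sqlite3 module. The `users` table, the function names, and the payload are all made up for illustration; the unsafe version is included only for contrast.

```python
import sqlite3


def find_user(conn, username):
    # Safe: the ? placeholder binds the value as data, never as SQL text
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


def find_user_unsafe(conn, username):
    # Anti-pattern, for contrast only: user input is spliced into the SQL
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def demo():
    # Hypothetical in-memory table with two users
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    payload = "nobody' OR '1'='1"  # classic injection string
    safe = find_user(conn, payload)
    unsafe = find_user_unsafe(conn, payload)
    conn.close()
    return safe, unsafe
```

Calling `demo()` returns an empty list for the parameterised version and both rows for the string-built one. When reviewing AI-generated data access code, this is one of the quicker things to verify.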

Cherny shared an example: while debugging a memory leak, he started the traditional approach of connecting a profiler and manually checking heap allocations. His colleague simply asked Claude to generate a heap dump and analyse it. Claude identified the issue on the first attempt and submitted a fix.

For certain classes of vulnerability, consistent AI-generated code may actually reduce risk compared to fatigued human developers cutting corners under deadline pressure.

What AI Gets Wrong

The problem isn't obvious errors. It's confident, subtle mistakes that pass every automated check.

Karpathy's "jagged intelligence" means AI can solve complex algorithmic problems while making elementary logical errors in the same session. It doesn't understand your threat model, your compliance requirements, or the business context that determines whether a design decision is safe or catastrophic.

The METR productivity study revealed something troubling: developers using AI tools believed they were 20% faster. Objective measurement showed they were actually 19% slower. More concerning, they weren't reading the diffs. They accepted AI suggestions without review, trusting code they didn't fully understand (METR, July 2025).

When Cherny was asked about vibe coding for critical systems, he was direct: "It's definitely not the thing you want to do all the time." Vibe coding works for prototypes and throwaway code. Production systems require a different approach, and that approach keeps evolving as AI gets better.

The New Attack Surface

The security implications extend beyond code quality.

Shadow AI meets shadow code. Employees are vibe-coding internal tools, dashboards, and automation scripts without security review. They're solving immediate problems, but those solutions now run in production with unknown vulnerability profiles.

Prompt injection becomes a supply chain risk. If AI coding tools are processing untrusted input, attackers may be able to influence the code they generate.

Context leakage compounds the problem. Developers paste proprietary code, credentials, and architectural details into AI prompts. That data flows to external APIs, creating exposure most security teams aren't monitoring.
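
One way to get a feel for the leakage problem is a pre-send check on prompt text. The sketch below is an assumption-heavy illustration, not a product: the three patterns are examples only and will miss most real secrets, and the function names are hypothetical. Dedicated secret scanners use far larger rule sets.

```python
import re

# Illustrative patterns only; a real scanner needs many more rules
SECRET_PATTERNS = {
    # AWS access key IDs start with AKIA followed by 16 uppercase alphanumerics
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM private key header
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # Assignments such as api_key = "..." or password: ...
    "generic_assignment": re.compile(
        r"\b(api[_-]?key|secret|token|password)\b\s*[:=]\s*['\"]?[^\s'\"]{8,}",
        re.IGNORECASE,
    ),
}


def find_secrets(text):
    """Return (pattern_name, offset) for every suspected secret in text."""
    findings = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, match.start()))
    return findings


def redact(text):
    """Replace every suspected secret with a placeholder before sending."""
    for pattern in SECRET_PATTERNS.values():
        text = pattern.sub("[REDACTED]", text)
    return text
```

A wrapper around whatever client sends prompts could call `find_secrets` and block or `redact` on any hit. It won't catch proprietary code or architectural detail, which is why visibility into what leaves the network still matters.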

For a broader view of AI-specific threats, see our AI Security Threats guide.

What Security Teams Should Do Now

The shift to AI-generated code requires adapted security practices, not abandoned ones. Here's where to focus.

Audit your AI tooling. Most security teams don't evaluate AI coding tools as part of their security posture. They should. What models are developers using? What data is being sent to external APIs? Are there guardrails configured?

Assume the code wasn't reviewed. The METR study showed developers accept AI suggestions without reading them. Your code review process needs to account for this reality. Consider requiring explicit attestation that AI-generated code was actually reviewed, not just approved.
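
What attestation looks like will vary by team. As one possible sketch, assume commits carry git-style trailers: a hypothetical `AI-Assisted: yes` line to flag AI-generated changes, and a `Reviewed-by:` line naming whoever actually read the diff. Neither the trailer name nor the policy below is a standard; both are assumptions for illustration.

```python
def parse_trailers(message):
    """Collect 'Key: value' lines from the last paragraph of a commit message."""
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    trailers = {}
    if len(paragraphs) < 2:
        # Subject line only: nothing that can count as a trailer block
        return trailers
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key and " " not in key:
            trailers.setdefault(key.lower(), []).append(value.strip())
    return trailers


def review_attested(message, author):
    """Hypothetical policy: AI-assisted commits need a reviewer other than the author."""
    trailers = parse_trailers(message)
    ai_assisted = any(
        v.lower() in ("yes", "true") for v in trailers.get("ai-assisted", [])
    )
    if not ai_assisted:
        return True  # policy only applies to commits flagged as AI-assisted
    reviewers = trailers.get("reviewed-by", [])
    return any(r and r != author for r in reviewers)
```

A check like this could run in CI against each commit message. It only proves someone signed their name, so it works best alongside review practices that make the sign-off meaningful.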

Update your threat model. AI-generated code changes the vulnerability profile. Fewer obvious injection flaws, more subtle logic errors. Fewer copy-paste mistakes, more confident-but-wrong implementations. Your testing approach should adapt.

Monitor for shadow AI coding. If employees can access AI coding tools, they're using them. Visibility into what's being built and deployed matters more than blanket prohibitions.

Train developers on AI-assisted review. Reviewing AI-generated code requires different skills than reviewing human code. The errors are different. The patterns are different. Developers need to know what to look for, how to gauge the capabilities of the agent, and how to get the best results from it.

A simple test: drop a (dummy) API key into the prompt window. Claude Code does a good job of catching this and asking you to rotate the key.

The Open Question

Is AI-generated code more or less secure than human-written code?

The honest answer: we don't know yet. The data is early and contradictory. Some organisations report fewer basic vulnerabilities. Others describe debugging nightmares from subtle AI-introduced flaws.

What's certain is that the volume of AI-generated code is growing rapidly. Whether that improves or degrades your security posture depends on how you adapt.

Cherny put it simply: "Software engineering is radically changing, and the hardest part even for early adopters and practitioners like us is to continue to re-adjust our expectations."

Security teams face the same challenge. The tools are changing. The code is changing. The question is whether your practices are changing fast enough to keep pace.

Summary

AI coding capability is doubling every 7 months. We've crossed from AI-assisted development to AI-authored software. Claude Code now writes 90% of its own codebase. Google reports that AI generates over 30% of its new code, and some leading developers ship code written almost entirely by AI. Ultimately, coders are still engineering. The tools for building code have changed, and at the same time the bar for who can engineer has been lowered.

The recursive nature of this shift matters: AI tools are building better AI tools, accelerating the capability curve further.

This shift creates new security challenges:

- Review gaps: Developers accept AI code without reading it

- Jagged intelligence: AI makes confident, subtle errors that pass automated checks

- Shadow AI: Employees vibe-code tools without security oversight

- Context leakage: Proprietary data flows into AI prompts

It also creates potential benefits:

- Consistency: AI applies secure patterns without fatigue-induced shortcuts

- Coverage: AI catches certain vulnerability classes reliably

The net effect depends on how security teams adapt. Audit AI tooling. Assume code wasn't reviewed. Update threat models for AI-specific failure modes. Monitor for shadow AI development.

The capability curve isn't slowing down. Your security practices need to keep pace.

Last updated: January 2026


References and Sources

- Cherny, B. (December 2025). X post on Claude Code productivity. 259 PRs, 497 commits, 40k lines added/removed, all AI-generated.

- Karpathy, A. (December 2025). 2025 LLM Year in Review. Discussion of vibe coding, jagged intelligence, and Claude Code as paradigm shift. karpathy.bearblog.dev

- METR (March 2025). Measuring AI Ability to Complete Long Tasks. AI task-duration capability doubles approximately every 7 months.

- METR (July 2025). Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity. Developers 19% slower with AI despite believing 20% faster.

- Orosz, G. (September 2025). How Claude Code is Built. The Pragmatic Engineer. 90% of Claude Code written by itself; 80%+ of Anthropic engineers use Claude Code daily.

- MIT Technology Review (December 2025). AI coding is now everywhere. 65% of developers using AI tools weekly. Claude Code runs 30+ hours autonomously.

- Collins Dictionary (2025). Named "vibe coding" Word of the Year.

- Globe and Mail (December 2025). AI models introduce known security flaws approximately 45% of the time.
